YouTube recently announced its stance on artificial intelligence (AI)-generated content on the platform. It has implemented new measures to ensure transparency and disclosure when videos have been altered or generated using AI tools. The move follows the platform’s content policy update of November 2023.

As part of its efforts to combat AI-generated content, YouTube has introduced a new tool that will require creators to indicate when their videos have been meaningfully altered or synthetically generated to appear realistic. This disclosure process has been integrated into the video-uploading workflow to make it easier for creators to add the necessary label.

Creators will now find a new section titled “Altered Content” in the uploading workflow, where they must respond to three key questions: whether the video portrays individuals saying or doing things they did not say or do, alters footage of real events or places, or includes realistic-looking scenes that did not occur. If any of these apply, creators must select “Yes”, and YouTube will automatically add a label to the video description.

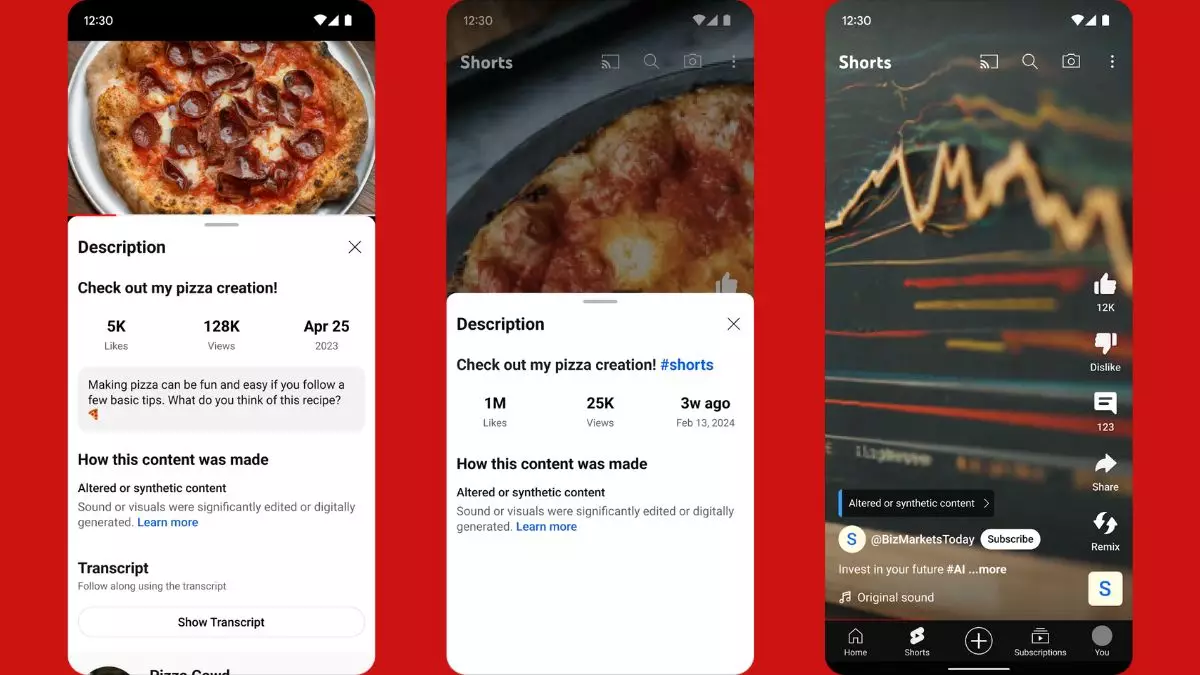

The new labels for AI-generated content will be visible on both long-form videos and Shorts. For Shorts, a more prominent tag will be placed above the channel’s name. Viewers will first see these labels in the Android and iOS apps, with plans to extend the feature to the web interface and TV. Creators will first encounter the disclosure workflow on the web interface.

YouTube has made it clear that failure to disclose AI-generated content will result in penalties. While creators will have a grace period to familiarize themselves with the new requirements, penalties may include content removal, suspension from the YouTube Partner Program, and more.

YouTube’s decision to crack down on AI-generated content stems from the increasing prevalence of deepfakes. The updated content policy aims to address these concerns by introducing disclosure tools and providing viewers with the option to request the removal of AI-generated or synthetic content that impersonates identifiable individuals. Additionally, measures have been implemented to safeguard the content of music labels and artists.

By taking proactive steps to tackle AI-generated content, YouTube is prioritizing transparency and accountability within its platform. These new measures aim to protect users from misinformation and deceptive practices, ultimately enhancing the overall content quality on YouTube.
