Quantum computing stands at the forefront of technological innovation, promising to change how we approach computational problems that are intractable for classical systems. Despite significant advances, however, the stability and reliability of quantum bits, or qubits, remain a challenge. Google’s recently launched quantum chip, Willow, showcases the company’s progress in overcoming these limitations, particularly in error correction. This article examines the implications of Willow, the nature of qubit technology, and the broader outlook for quantum computing.

At the core of quantum computing are qubits, which differ fundamentally from classical bits. A traditional bit can exist in only one of two states: 0 or 1. A qubit, by contrast, can exist in a superposition of 0 and 1, a weighted combination of both states that resolves to a single value only when it is measured. Combined across many qubits, this property lets a quantum computer represent and manipulate a very large number of states at once, which makes it exceptionally powerful for certain types of calculations. This power comes at a cost, however: qubits are fragile and susceptible to errors stemming from environmental interactions. When they encounter noise they can decohere, losing their quantum state.
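
To make the contrast with classical bits concrete, here is a minimal sketch of a single qubit written as a pair of amplitudes, one for 0 and one for 1. The function names are hypothetical and chosen for illustration only; the one physical rule the code relies on is that the probability of measuring each outcome is the squared magnitude of its amplitude.

```python
import math

# A single-qubit state is modelled as two amplitudes: (amplitude of 0, amplitude of 1).
# Names here are illustrative assumptions, not part of any quantum library.

def normalize(a0, a1):
    """Scale the amplitudes so the measurement probabilities sum to 1."""
    norm = math.sqrt(abs(a0) ** 2 + abs(a1) ** 2)
    return a0 / norm, a1 / norm

def measurement_probabilities(state):
    """Probability of reading 0 and of reading 1 when the qubit is measured."""
    a0, a1 = state
    return abs(a0) ** 2, abs(a1) ** 2

classical_zero = (1.0, 0.0)                 # behaves like an ordinary bit set to 0
equal_superposition = normalize(1.0, 1.0)   # equal weight on 0 and 1

p0, p1 = measurement_probabilities(equal_superposition)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

A state such as `classical_zero` always reads 0, while the superposition gives each outcome an equal chance. Noise that disturbs the amplitudes changes those probabilities, which is why decoherence translates directly into computational errors.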

Up to now, quantum devices have achieved impressive reliability, reaching about 99.9% accuracy, or roughly one error in every thousand operations. Practical, large-scale quantum computing, however, needs an error rate closer to one per trillion operations. Achieving such precision is a formidable challenge that researchers have struggled with since quantum error correction methods were first proposed in the 1990s.
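
The size of that gap is easier to appreciate with a little arithmetic. The sketch below compares the two error rates and estimates how likely a computation is to finish without a single error. It assumes errors strike each operation independently, which is a simplification, and the constant and function names are hypothetical.

```python
import math

CURRENT_ERROR_RATE = 1e-3    # about 99.9% accuracy: one error per thousand operations
TARGET_ERROR_RATE = 1e-12    # one error per trillion operations

def success_probability(error_rate, operations):
    """Chance that every operation succeeds, assuming independent errors."""
    # log1p keeps precision when error_rate is extremely small.
    return math.exp(operations * math.log1p(-error_rate))

gap_in_orders_of_magnitude = math.log10(CURRENT_ERROR_RATE / TARGET_ERROR_RATE)

print(f"Gap: about {gap_in_orders_of_magnitude:.0f} orders of magnitude")
print(f"1,000 operations at today's rate: "
      f"{success_probability(CURRENT_ERROR_RATE, 1_000):.3f}")
print(f"1 billion operations at the target rate: "
      f"{success_probability(TARGET_ERROR_RATE, 1_000_000_000):.3f}")
```

Under this simple model, a run of only a thousand operations at today's error rate completes cleanly roughly 37% of the time, whereas a billion operations at the target rate would almost always succeed.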

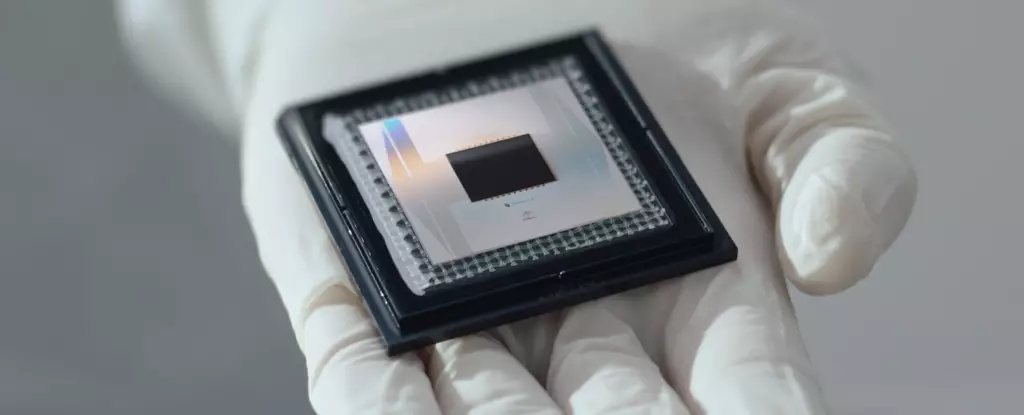

Google’s Willow represents a significant step in reducing error rates in quantum systems. Using an architecture with 105 physical qubits, the chip has allowed researchers to keep qubits stable over extended periods. Remarkably, Willow has kept a single logical qubit stable enough to experience errors only once per hour, a stark contrast to previous systems that faltered every few seconds.

What sets Willow apart is the way it organizes and employs its physical qubits. By growing the lattice of physical qubits that encodes a logical qubit from 3×3 to 5×5 and then 7×7, researchers reduced the error rate exponentially: each step up in lattice size cut the logical error rate by a further factor. This scaling indicates that adding physical qubits can directly improve the effectiveness of error correction, which is critical for building more robust quantum systems.
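
The scaling described above can be sketched numerically. The example assumes each lattice is a surface-code patch with d×d data qubits plus d×d − 1 measurement qubits, a layout this article does not itself specify. The starting error rate and the suppression factor are placeholder values chosen for illustration, not Willow's measured figures, and the function names are hypothetical.

```python
def physical_qubits(distance):
    """Qubits in one patch, assuming d*d data qubits and d*d - 1 measurement qubits."""
    data_qubits = distance ** 2
    measurement_qubits = distance ** 2 - 1
    return data_qubits + measurement_qubits

def logical_error_rate(distance, base_rate, suppression_factor, base_distance=3):
    """Divide the error rate by suppression_factor for every +2 step in lattice size."""
    steps = (distance - base_distance) // 2
    return base_rate / suppression_factor ** steps

# Placeholder inputs, NOT measured values from Willow.
BASE_RATE = 3e-3            # assumed logical error rate for the 3x3 lattice
SUPPRESSION_FACTOR = 2.0    # assumed improvement per lattice-size step

for d in (3, 5, 7):
    rate = logical_error_rate(d, BASE_RATE, SUPPRESSION_FACTOR)
    print(f"{d}x{d} lattice: {physical_qubits(d)} physical qubits, "
          f"logical error rate {rate:.2e}")
```

Under these assumptions the 7×7 patch needs 97 physical qubits, which fits within a 105-qubit chip. Because the error rate is divided by the same factor at every step, the improvement compounds, which is the sense in which the suppression is exponential.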

A New Era in Quantum Algorithms

With enhanced error correction, the potential applications of quantum computing grow significantly. Michael Newman and Kevin Satzinger, research scientists on Google’s Quantum AI team, emphasize that the stability achieved with Willow marks a pivotal advance toward large-scale quantum applications. They note, “This demonstrates the exponential error suppression promised by quantum error correction, a nearly 30-year-old goal for quantum computing.” The implications are substantial: the result not only shows the potential for solving complex problems but also sets the stage for future innovations in quantum algorithm development.

One illustration of Willow’s capabilities is a benchmark task that it completed in just five minutes but that would take classical supercomputers an estimated 10 septillion (10^25) years. Although the task was specifically designed for quantum processors, it serves as a compelling demonstration of the transformative potential of quantum technology.

The Road Ahead: Challenges and Opportunities

Despite the progress evidenced by Willow, a notable gap remains between the present capabilities of quantum systems and the lofty aspirations for practical quantum computing. Achieving the required error rates for widespread application necessitates not only advances in hardware and increased qubit counts but also continuous refinement of algorithms. As Newman and Satzinger aptly state, “Quantum error correction looks like it’s working now, but there’s a big gap between the one-in-a-thousand error rates of today and the one-in-a-trillion error rates needed tomorrow.”

While Google’s Willow is a promising step forward in quantum computing, it also underscores the challenges that researchers still face. The quest to stabilize qubits and reach error rates suitable for practical applications is far from over. Even so, Willow marks an important benchmark in this journey, opening new avenues for exploration and innovation in quantum technologies. As researchers continue to bridge the gap between theory and reality, quantum computing retains the potential to redefine what is computationally possible.
